NVIDIA has announced a major advance in scaling the performance of ‘Mixture of Experts’ (MoE) models. The work targets one of the industry’s most significant challenges: raising performance without hitting a wall in computational resources. NVIDIA attributes the gain to co-design, in which hardware and software are optimized together so that performance keeps scaling.

NVIDIA’s AI Cluster Revolutionizes MoE Model Performance

The AI sector has been racing to improve foundational large language models (LLMs) by increasing parameter counts and optimizing performance. However, this approach places heavy demands on computational resources. MoE models offer a solution by activating only a subset of their parameters (the "experts") for each token, rather than the full model. Despite their growing adoption, these models are difficult to scale, a problem NVIDIA says it has now overcome.
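The idea of activating only some parameters per token can be illustrated with a toy top-k router. This is a minimal sketch and not the routing used by any particular model: the function names, the four toy experts, and `top_k=2` are assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def make_toy_experts(num_experts):
    """Hypothetical experts: expert i simply scales its input by (i + 1)."""
    return [lambda t, s=i + 1: [s * x for x in t] for i in range(num_experts)]


def moe_layer(token, router_logits, experts, top_k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    out = [0.0] * len(token)
    used = []
    for idx, weight in route_token(router_logits, top_k):
        expert_out = experts[idx](token)  # unselected experts are never called
        out = [o + weight * e for o, e in zip(out, expert_out)]
        used.append(idx)
    return out, used
```

With four experts and `top_k=2`, only half of the expert parameters are touched for a given token, which is the source of the compute savings described above.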

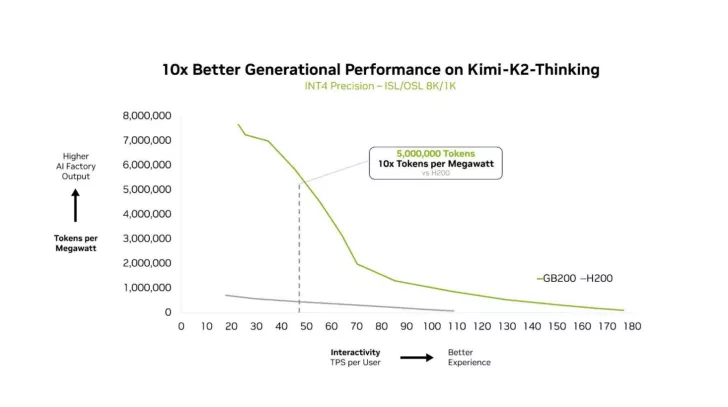

According to a recent press release, NVIDIA’s GB200 ‘Blackwell’ NVL72 configuration has achieved a 10x performance increase over the previous Hopper-based HGX H200. The result was measured on Kimi K2 Thinking, a leading open-source MoE LLM with 32 billion activated parameters per forward pass, and highlights the potential of NVIDIA’s new architecture. Blackwell is positioned to capitalize on the rise of frontier MoE models.

Enhanced AI Model Efficiency

NVIDIA’s approach uses the 72-GPU GB200 NVL72 configuration, combined with 30TB of fast shared memory, to take expert parallelism further. Token batches are split and distributed across many GPUs, which makes communication volume grow non-linearly; the large shared memory and the tightly coupled 72-GPU design are intended to keep that distribution efficient.
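To make the communication cost of expert parallelism concrete, the following toy model shards experts evenly across GPUs and counts how many token-to-expert dispatches have to leave the GPU that holds the token. It is a sketch under stated assumptions: routing is uniformly random, tokens are placed round-robin, and the expert count, `top_k`, and function names are hypothetical rather than taken from NVIDIA's software.

```python
import random


def expert_owner(expert_id, num_experts, num_gpus):
    """GPU index that hosts a given expert when experts are sharded evenly."""
    experts_per_gpu = num_experts // num_gpus
    return expert_id // experts_per_gpu


def count_dispatch(num_tokens, num_experts, num_gpus, top_k, seed=0):
    """Return (local, remote) counts of token-to-expert dispatches."""
    if num_experts % num_gpus != 0:
        raise ValueError("num_experts must be divisible by num_gpus")
    rng = random.Random(seed)
    local = remote = 0
    for t in range(num_tokens):
        src_gpu = t % num_gpus  # assumed round-robin token placement
        # Assumed uniform routing: pick top_k distinct experts at random.
        for e in rng.sample(range(num_experts), top_k):
            if expert_owner(e, num_experts, num_gpus) == src_gpu:
                local += 1
            else:
                remote += 1
    return local, remote


if __name__ == "__main__":
    for gpus in (1, 8, 72):
        local, remote = count_dispatch(7200, 144, gpus, top_k=8)
        print(gpus, "GPUs: remote share =", remote / (local + remote))
```

Under these assumptions the expected remote share is 1 - 1/G for G GPUs, so at 72 GPUs nearly every dispatch crosses the interconnect. This is why fast GPU-to-GPU communication matters as expert parallelism widens. The toy counts dispatches only and does not model bandwidth or latency.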

Additional full-stack optimizations are crucial for maximizing MoE inference performance. The NVIDIA Dynamo framework assigns prefill and decode tasks to different GPUs, so that decode can run with large expert parallelism while prefill uses parallelism techniques suited to its own workload. The NVFP4 format further raises performance while helping to preserve accuracy.
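The article does not describe NVFP4 itself. As a rough illustration of how a block-scaled 4-bit float format can keep rounding error small, the sketch below assumes a 4-bit E2M1 value grid and one scale per 16-element block. The scale is kept as an ordinary Python float instead of an 8-bit value, and all function names are hypothetical; this is a simplification and not NVIDIA's implementation.

```python
# Magnitudes representable by a 4-bit E2M1 float (sign stored separately).
E2M1_LEVELS = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumed number of values sharing one scale


def quantize_block(block):
    """Map one block to (scale, codes) where each code lies on the E2M1 grid."""
    amax = max(abs(x) for x in block)
    # Scale so the largest magnitude in the block lands on the top level (6.0).
    scale = amax / E2M1_LEVELS[-1] if amax > 0 else 1.0
    codes = []
    for x in block:
        # Round the scaled magnitude to the nearest representable level.
        mag = min(E2M1_LEVELS, key=lambda lv: abs(abs(x) / scale - lv))
        codes.append(-mag if x < 0 else mag)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate values from a scale and its E2M1 codes."""
    return [scale * c for c in codes]


def quantize(values):
    """Quantize a flat list block by block."""
    return [
        quantize_block(values[i:i + BLOCK_SIZE])
        for i in range(0, len(values), BLOCK_SIZE)
    ]
```

Because each small block gets its own scale, a single outlier only coarsens the values in its own block and leaves the rest of the tensor unaffected. That is the general reason block-scaled low-precision formats can reduce memory and compute cost with limited accuracy loss.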

The result is significant for NVIDIA and its partners, as the GB200 NVL72 configuration reaches a critical stage in the supply chain. MoE models, valued for their computational efficiency, are increasingly being deployed across diverse environments, and NVIDIA appears well positioned to benefit from this trend.