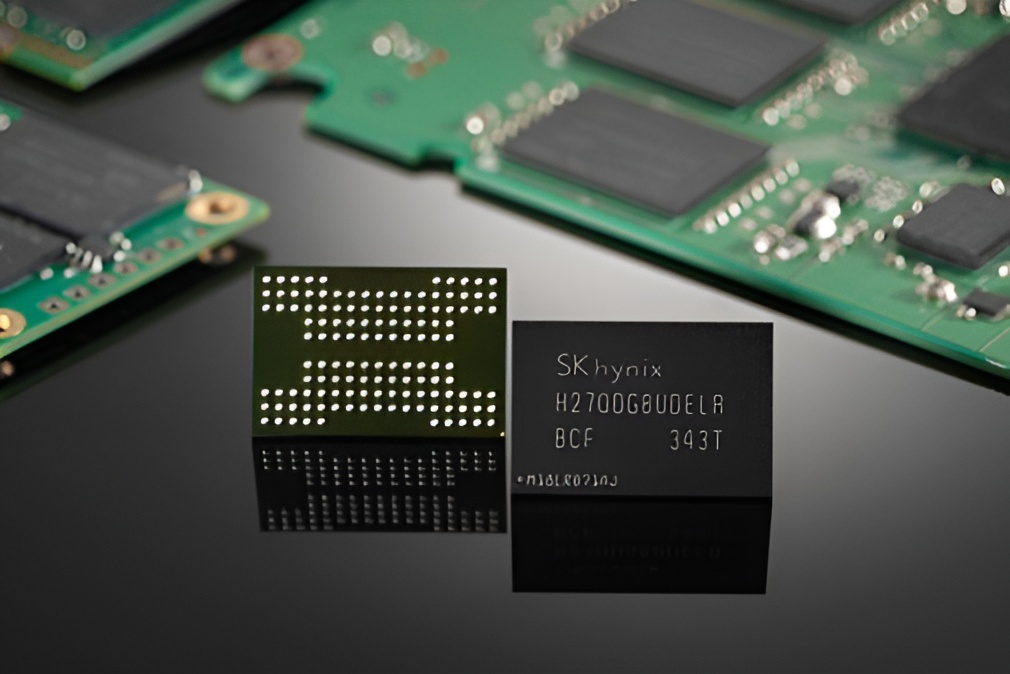

SK hynix is developing an inference-optimized AI SSD built on recent advances in NAND technology. The company aims to unveil it by 2027, promising significant gains for AI workloads.

NVIDIA & SK hynix’s Collaborative Breakthrough in AI Technology

As AI workloads shift from training toward inference, hardware has to adapt to keep latency low and throughput high. NVIDIA, for example, is pairing its Rubin CPX GPU with GDDR7 memory, according to recent reports. A similar shift is underway for NAND: NVIDIA and SK hynix are reportedly co-developing an SSD under an internal project called “Storage Next,” which could reshape the NAND market.

SK hynix intends to show a prototype by next year, and the AI SSD could reach as many as 100 million IOPS, far beyond what conventional enterprise SSDs deliver. Today's AI workloads need continuous access to enormous sets of model parameters, a demand that exceeds what HBM and general-purpose DRAM can supply on their own. The AI SSD is intended to fill that gap as a pseudo-memory layer optimized for AI workloads.
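To put the 100 million IOPS figure in perspective, the sketch below converts an IOPS rate into sustained bandwidth, which is simply IOPS multiplied by the bytes moved per I/O. The reports do not say what transfer size the figure assumes, so the 512-byte and 4 KiB sizes used here are illustrative assumptions only, and `implied_bandwidth_gb_s` is a hypothetical helper, not part of any SK hynix or NVIDIA tooling.

```python
# Reported target from the article; the I/O sizes below are assumptions,
# since the reports do not state the transfer size behind the figure.
TARGET_IOPS = 100_000_000


def implied_bandwidth_gb_s(iops: int, io_size_bytes: int) -> float:
    """Bandwidth in decimal GB/s (10**9 bytes/s) for a given IOPS rate and I/O size."""
    return iops * io_size_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical small-block sizes typical of random-read benchmarks.
    for size in (512, 4096):
        gb_s = implied_bandwidth_gb_s(TARGET_IOPS, size)
        print(f"{size:>5} B per I/O -> {gb_s:.1f} GB/s")
```

Under these assumptions, 100 million IOPS works out to about 51.2 GB/s at 512 bytes per I/O and about 409.6 GB/s at 4 KiB per I/O, which illustrates why the project's stated focus on controller architecture matters alongside the NAND itself.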

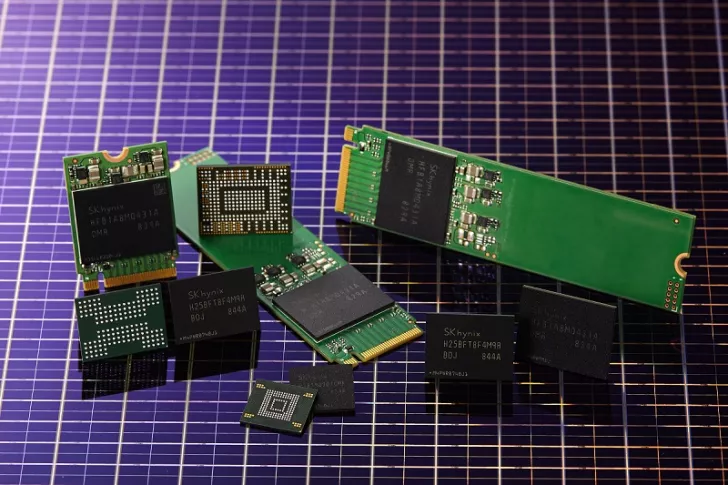

Impact on the NAND Market and Future Implications

Reports suggest that SK hynix, working with NVIDIA, is pressing ahead with the Storage Next project, focusing on higher throughput and better energy efficiency through advanced NAND and controller architectures. The goals are ambitious, but NAND supply is already strained by storage demand from cloud service providers (CSPs) and leading AI companies. If the AI SSD goes mainstream, NAND flash could face the same kind of tight supply and price volatility now seen in the DRAM market.

Demand from AI workloads is already disrupting memory supply chains, leaving consumers and suppliers little time to adapt. DRAM contract prices have become increasingly volatile, and NAND may soon follow.