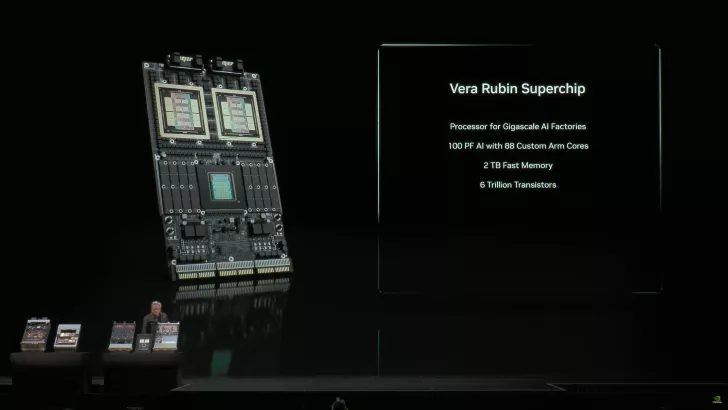

At NVIDIA's GTC event in Washington, D.C., the company unveiled the Vera Rubin Superchip, its next-generation platform for AI data centers and the successor to the Blackwell generation.

NVIDIA Prepares for Mass Production with Rubin GPUs

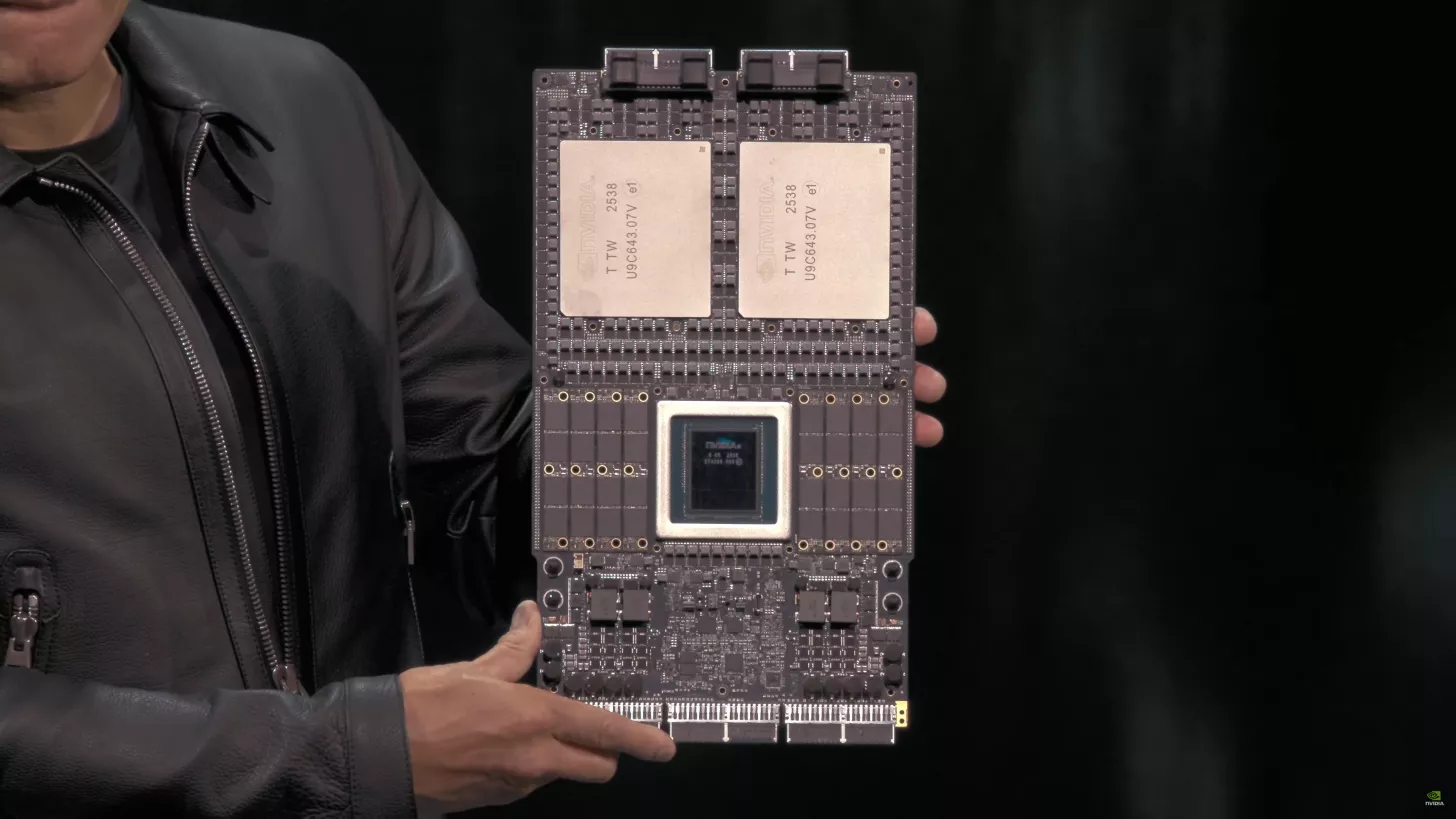

At GTC in October 2025, NVIDIA CEO Jensen Huang showed a real Vera Rubin Superchip sample on stage for the first time. The Superchip pairs the Vera CPU with two large Rubin GPUs, combining LPDDR system memory on the CPU side with HBM4 memory on the Rubin GPUs.

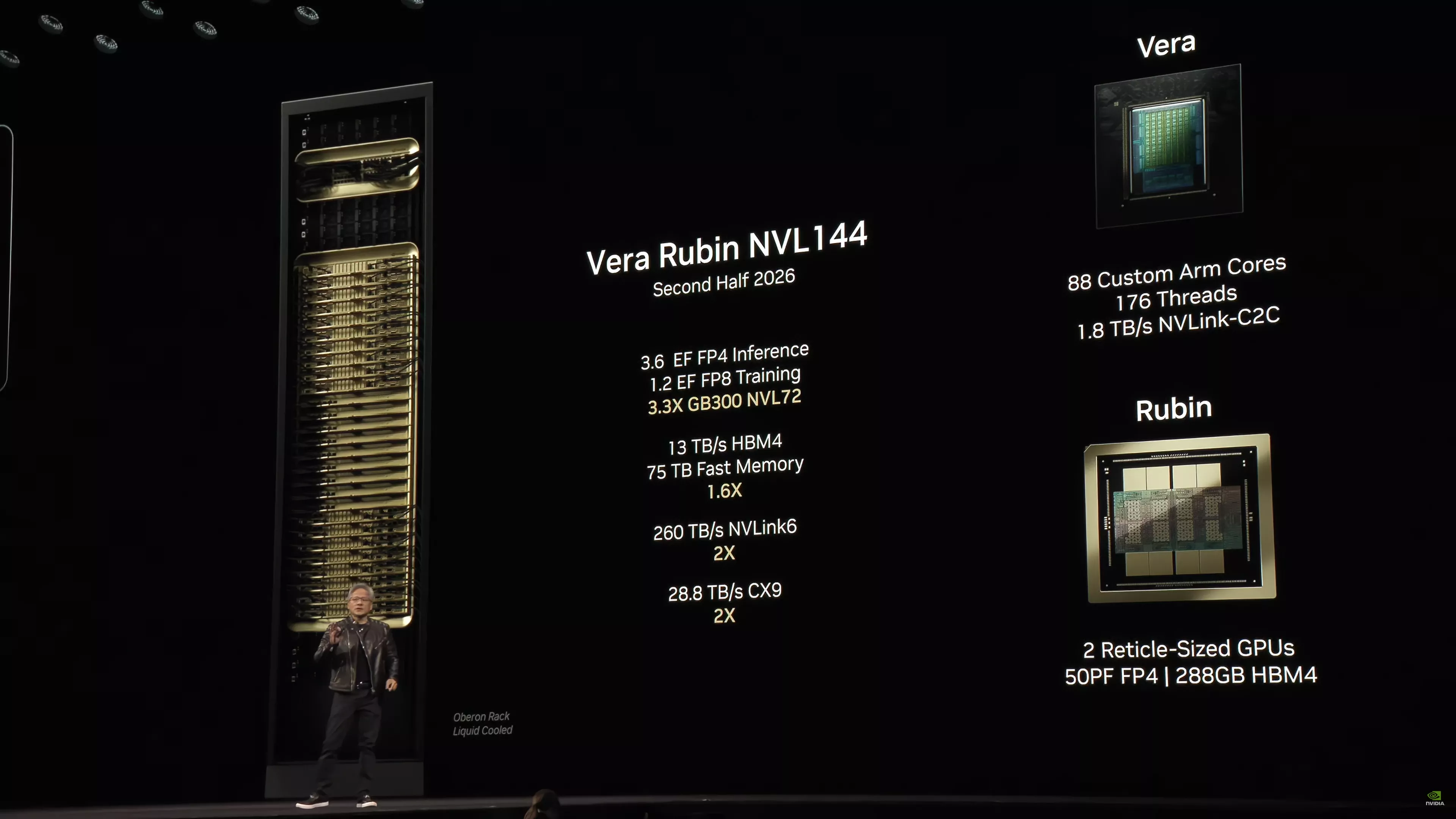

The Upcoming NVIDIA Vera Rubin NVL144 and Ultra Systems

The NVIDIA Vera Rubin NVL144 platform is scheduled to launch in the second half of 2026. Each Vera Rubin Superchip in the platform pairs two Rubin GPUs with a Vera CPU. Each Rubin GPU combines two reticle-sized dies, delivering up to 50 PFLOPS of FP4 performance, and carries 288 GB of next-gen HBM4 memory. The Vera CPU has 88 custom Arm cores with 176 threads and connects over a 1.8 TB/s NVLink-C2C interconnect.

At rack scale, the NVL144 is rated for 3.6 exaflops of FP4 inference and 1.2 exaflops of FP8 training, a 3.3x increase over the GB300 NVL72. It offers 13 TB/s of HBM4 memory bandwidth and 75 TB of fast memory, a 60% uplift over the GB300 NVL72, while NVLink and CX9 bandwidth both double, to 260 TB/s and 28.8 TB/s respectively.
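The rack-level FP4 figure follows directly from the per-GPU number. The sketch below is a back-of-envelope check, assuming that "NVL144" counts GPU dies (72 Rubin packages with two dies each); the constant and function names are hypothetical and chosen only for illustration. It also works the quoted 3.3x uplift backwards to show the GB300 NVL72 baseline that ratio implies.

```python
# Back-of-envelope check of the Vera Rubin NVL144 figures.
# Assumption: "NVL144" counts GPU dies, i.e. 72 Rubin packages x 2 dies each.

RUBIN_FP4_PFLOPS = 50    # FP4 PFLOPS per Rubin GPU package (from the article)
DIES_PER_RUBIN = 2       # two reticle-sized dies per package
NVL144_DIES = 144        # dies per rack (assumed meaning of "144")


def rack_fp4_exaflops(total_dies: int, dies_per_gpu: int, pflops_per_gpu: float) -> float:
    """Aggregate FP4 throughput in exaflops (1 EF = 1000 PF)."""
    packages = total_dies // dies_per_gpu
    return packages * pflops_per_gpu / 1000


nvl144_fp4 = rack_fp4_exaflops(NVL144_DIES, DIES_PER_RUBIN, RUBIN_FP4_PFLOPS)
print(f"NVL144 FP4: {nvl144_fp4:.1f} EF")  # 72 packages * 50 PF

# Dividing the quoted rack figure by the quoted 3.3x uplift gives the
# GB300 NVL72 FP4 baseline that the ratio implies.
implied_gb300_fp4 = 3.6 / 3.3
print(f"Implied GB300 NVL72 FP4: {implied_gb300_fp4:.2f} EF")
```

The 72 x 50 PF product lands exactly on the 3.6 exaflops NVIDIA quotes, which supports reading the "144" in the name as a die count rather than a package count.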

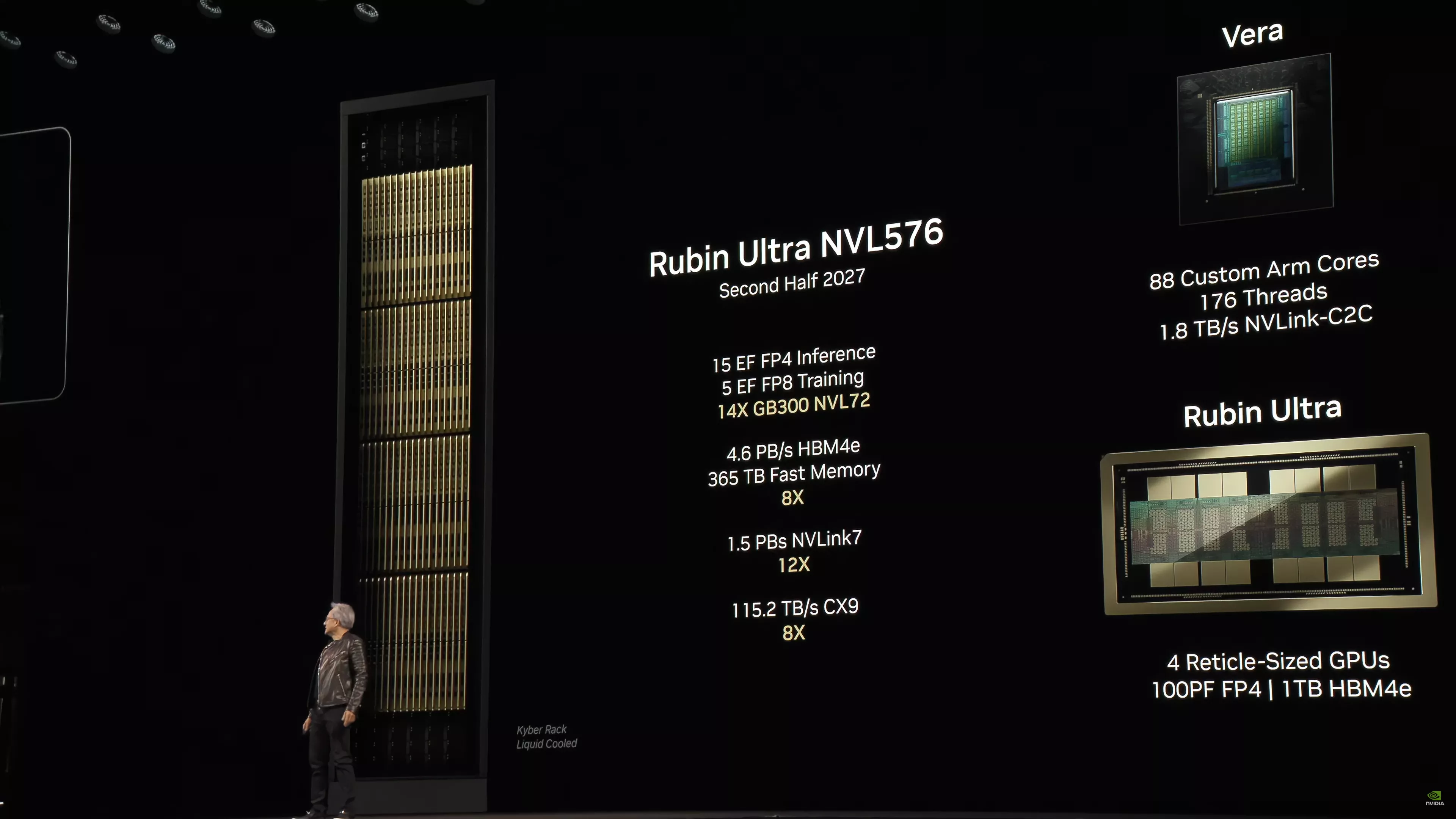

Looking ahead to 2027, the Rubin Ultra NVL576 system goes further. It scales to 576 GPU dies and keeps the same Vera CPU, while each Rubin Ultra GPU combines four reticle-sized dies, offering up to 100 PFLOPS of FP4 and 1 TB of HBM4e memory across 16 HBM sites. The full system is rated for 15 exaflops of FP4 inference and 5 exaflops of FP8 training, a 14x increase over the GB300 NVL72, with 4.6 PB/s of HBM4e bandwidth and 365 TB of fast memory.
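The same arithmetic can be applied to Rubin Ultra. This is a rough sketch, assuming "NVL576" counts GPU dies (144 packages with four dies each) and that 1 TB of HBM4e means 1024 GB split evenly over the 16 sites; all variable names are hypothetical. The assumption that the rest of the 365 TB "fast memory" is Vera CPU LPDDR is also ours, not NVIDIA's.

```python
# Rough consistency check of the Rubin Ultra NVL576 figures.
# Assumption: "NVL576" counts GPU dies, i.e. 144 Rubin Ultra packages x 4 dies each.

NVL576_DIES = 576
DIES_PER_RUBIN_ULTRA = 4
RUBIN_ULTRA_FP4_PFLOPS = 100   # FP4 PFLOPS per package (from the article)
RUBIN_ULTRA_HBM4E_TB = 1       # HBM4e per package (from the article)
HBM_SITES = 16                 # HBM stacks per package (from the article)

packages = NVL576_DIES // DIES_PER_RUBIN_ULTRA
fp4_exaflops = packages * RUBIN_ULTRA_FP4_PFLOPS / 1000  # quoted as ~15 EF
hbm4e_total_tb = packages * RUBIN_ULTRA_HBM4E_TB         # HBM4e share of the 365 TB
# Assumption: 1 TB = 1024 GB, divided evenly across the HBM sites.
gb_per_hbm_site = RUBIN_ULTRA_HBM4E_TB * 1024 / HBM_SITES

print(f"Packages: {packages}")
print(f"FP4: {fp4_exaflops:.1f} EF")
print(f"HBM4e total: {hbm4e_total_tb} TB (remainder of 365 TB assumed to be LPDDR)")
print(f"Per HBM site: {gb_per_hbm_site:.0f} GB")

# Dividing the quoted 15 EF by the quoted 14x uplift gives the implied
# GB300 NVL72 baseline, which can be compared with the NVL144 ratio.
print(f"Implied GB300 NVL72 FP4: {15 / 14:.2f} EF")
```

The product comes to 14.4 exaflops, which NVIDIA rounds to 15, and the implied GB300 NVL72 baseline (about 1.07 EF) is close to the one implied by the NVL144's 3.3x figure, so the two uplift claims are roughly consistent with each other.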

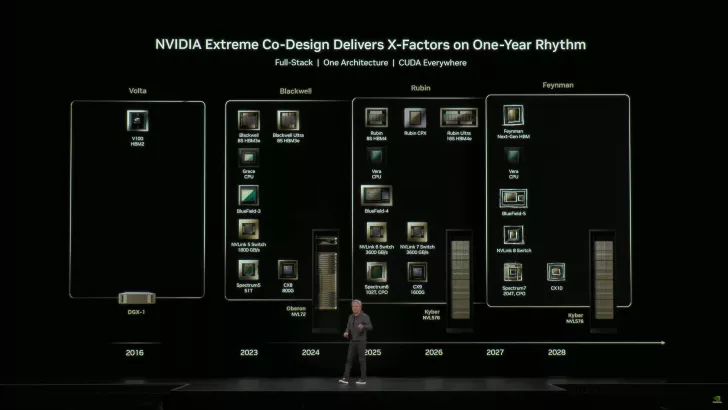

NVIDIA Data Center / AI GPU Roadmap

| GPU Codename | Feynman | Rubin (Ultra) | Rubin | Blackwell (Ultra) | Blackwell | Hopper | Ampere | Volta | Pascal |
|---|---|---|---|---|---|---|---|---|---|
| GPU Family | GF200? | GR300? | GR200? | GB300 | GB200/GB100 | GH200/GH100 | GA100 | GV100 | GP100 |
| GPU SKU | F200? | R300? | R200? | B300 | B100/B200 | H100/H200 | A100 | V100 | P100 |
| Memory | HBM4e/HBM5? | HBM4e | HBM4 | HBM3e | HBM3e | HBM2e/HBM3/HBM3e | HBM2e | HBM2 | HBM2 |
| Launch | 2028 | 2027 | 2026 | 2025 | 2024 | 2022-2024 | 2020-2022 | 2017 | 2016 |