NVIDIA’s Feynman GPUs to Feature Groq’s LPUs by 2028, Stacked Like AMD’s X3D Dies

NVIDIA could reshape AI inference with its next-generation Feynman chips, which may incorporate Groq’s LPUs (Language Processing Units). The move would aim to put NVIDIA at the forefront of inference hardware by pairing its GPU compute with Groq’s SRAM-heavy, deterministic architecture.

NVIDIA’s Strategy with LPU Integration

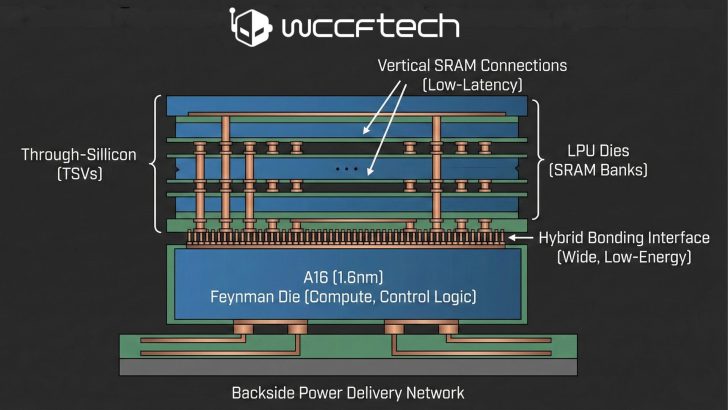

NVIDIA may use its licensing agreement with Groq to gain a competitive edge. According to reports, NVIDIA plans to integrate LPUs into its future Feynman GPUs using TSMC’s hybrid bonding technology. The approach mirrors AMD’s X3D CPUs, where 3D V-Cache tiles are bonded to the main compute die.

According to industry observers, building large SRAM arrays into a single monolithic die is inefficient, because SRAM scales poorly at advanced process nodes and is expensive to produce there. Stacking LPU dies on top of the Feynman compute die could prove more effective. In this arrangement, the main Feynman die, built on TSMC’s A16 (1.6nm) process, would hold the compute blocks, while the separate stacked LPU dies would host the large SRAM banks, a split that is expected to boost the chip’s performance.

Challenges and Considerations

Integrating LPUs poses significant engineering challenges, chiefly in thermal management and at the execution level. Stacking dies raises compute density, which could create thermal bottlenecks. There is also a conflict between determinism and flexibility: LPUs rely on a fixed execution order, which limits how freely work can be scheduled.

For NVIDIA, the key complication is reconciling CUDA with LPU-style execution: LPUs demand explicit memory placement, whereas CUDA is designed to abstract the hardware away from the developer. Integrating large SRAM into its AI architectures won’t be easy for Team Green, and keeping combined LPU-GPU environments well optimized would take a considerable engineering feat.