In the rapidly evolving AI landscape, a new rivalry is taking shape. While NVIDIA has long been the dominant force in AI hardware, Google is emerging as a formidable challenger, and its advances in custom AI chips pose significant competition to NVIDIA’s longstanding leadership.

Google’s journey into custom AI hardware began in 2016 with the introduction of its first TPU. This ASIC was built specifically for machine learning at a time when rivals such as AMD, Intel, and NVIDIA relied on general-purpose processors like GPUs and CPUs for AI workloads. More recently, Google unveiled its seventh-generation TPU, Ironwood, which has captured industry attention and highlights the intensifying competition between Google and NVIDIA. Let’s explore how Google’s latest innovations stack up against NVIDIA’s offerings.

Google’s Ironwood TPUs: A New Benchmark in AI Performance

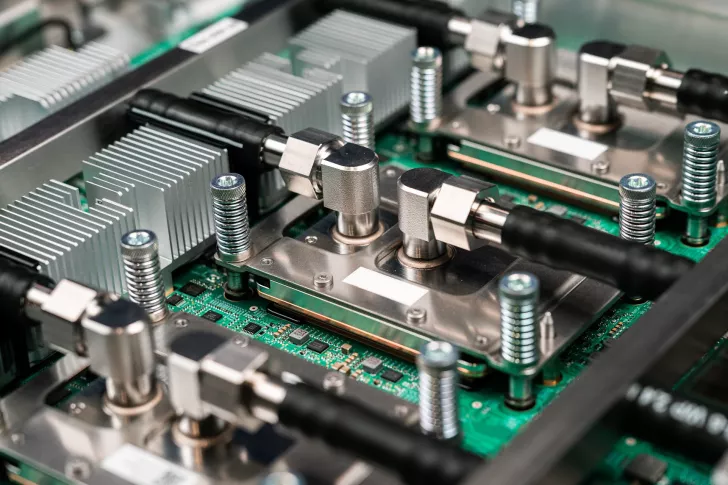

Ironwood, the latest addition to Google’s AI arsenal, is designed to reshape inference-focused computing. Google positions it as its first TPU built primarily for inference, reflecting the industry’s shift in emphasis from training models to serving them. Its headline features include:

- 10x peak performance improvement over TPU v5p.

- 4x better performance per chip for both training and inference workloads compared to TPU v6e (Trillium).

- The most powerful and energy-efficient custom silicon Google has built to date.

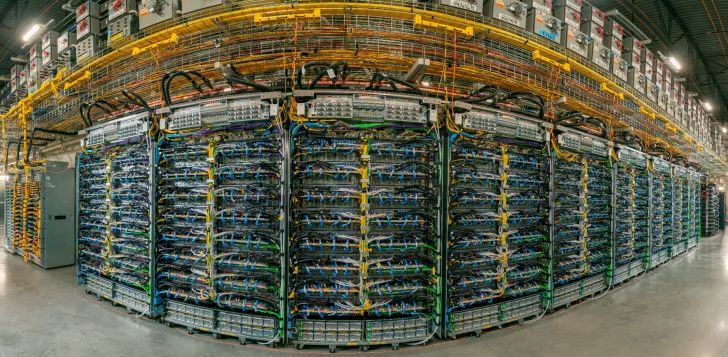

Each Ironwood chip carries 192 GB of HBM with 7.4 TB/s of bandwidth and delivers a peak of 4,614 TFLOPS of FP8 compute. That is roughly 16x the peak of TPU v4, although TPU v4’s figure is quoted for BF16, a higher-precision format. In the superpod configuration, Google connects 9,216 chips per pod for a combined 42.5 exaFLOPS of FP8 compute. This scale is where Google’s interconnect stands out: a single Ironwood pod spans far more chips than one NVLink domain, such as the 72 GPUs in NVIDIA’s GB200 NVL72 rack-scale system.
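The pod-level figures follow from multiplying the per-chip numbers. The Python sketch below reproduces them. The TPU v4 baseline of 275 TFLOPS (BF16) is an assumption based on Google’s commonly cited TPU v4 spec rather than a figure from this article, and the helper names are hypothetical.

```python
# Per-chip figures quoted above for Ironwood (peak FP8 compute, HBM capacity).
IRONWOOD_TFLOPS_FP8 = 4_614
IRONWOOD_HBM_GB = 192
CHIPS_PER_SUPERPOD = 9_216

# Assumption: commonly cited TPU v4 peak (BF16), used only for the ratio below.
TPU_V4_TFLOPS_BF16 = 275


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute in exaFLOPS (1 EFLOPS = 1,000,000 TFLOPS)."""
    return chips * tflops_per_chip / 1_000_000


def pod_hbm_petabytes(chips: int, gb_per_chip: float) -> float:
    """Aggregate HBM in decimal petabytes (1 PB = 1,000,000 GB)."""
    return chips * gb_per_chip / 1_000_000


if __name__ == "__main__":
    print(f"Pod compute: {pod_exaflops(CHIPS_PER_SUPERPOD, IRONWOOD_TFLOPS_FP8):.1f} EFLOPS")
    print(f"Pod HBM:     {pod_hbm_petabytes(CHIPS_PER_SUPERPOD, IRONWOOD_HBM_GB):.2f} PB")
    print(f"vs TPU v4:   {IRONWOOD_TFLOPS_FP8 / TPU_V4_TFLOPS_BF16:.1f}x peak TFLOPS")
```

Running it prints about 42.5 EFLOPS, 1.77 PB, and 16.8x. The last ratio compares FP8 against BF16, so it overstates the like-for-like gain.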

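Google’s Inter-Chip Interconnect (ICI) wires the chips together in a 3D torus topology. In this layout, each chip links directly to its neighbors along three axes, and the links wrap around at the edges. ICI scales across 43 blocks of superpods. Summed over 9,216 chips, the 192 GB per chip yields roughly 1.77 PB (about 1.8 PB) of shared HBM within a single pod. This combination of scale and memory positions the superpod as a disruptive force in AI computing.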
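To show why a torus helps at scale, here is a minimal sketch of 3D torus wiring. It assumes a hypothetical 4×4×4 block of 64 chips; these dimensions are for illustration only and are not Ironwood’s published topology. The wraparound links cut the worst-case hop count compared with a plain grid of the same size.

```python
def torus_neighbors(coord, dims):
    """Direct neighbors of a chip in a 3D torus; links wrap at the edges.

    Assumes every dimension is at least 3, so each chip has six distinct neighbors.
    """
    x, y, z = coord
    nx, ny, nz = dims
    return {
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),
    }


def torus_hops(a, b, dims):
    """Minimum hop count between two chips; each axis can be traversed either way around."""
    return sum(min(abs(p - q), n - abs(p - q)) for p, q, n in zip(a, b, dims))


def mesh_hops(a, b):
    """Hop count in the same grid without wraparound links, for comparison."""
    return sum(abs(p - q) for p, q in zip(a, b))


if __name__ == "__main__":
    dims = (4, 4, 4)  # hypothetical 64-chip block
    corner, opposite = (0, 0, 0), (3, 3, 3)
    print(sorted(torus_neighbors(corner, dims)))
    print("torus hops:", torus_hops(corner, opposite, dims))
    print("mesh hops: ", mesh_hops(corner, opposite))
```

In this example, opposite corners are 3 hops apart in the torus but 9 hops apart in a mesh. The farthest any two chips can be in the 4×4×4 torus is 6 hops.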

Google’s ASIC Aspirations: Challenging NVIDIA’s AI Supremacy

As the AI landscape shifts, Google’s Ironwood TPUs are poised to redefine inference computing. With the race to train models giving way to serving them at scale, raw TFLOPS is no longer the only metric that matters; latency, throughput, and cost efficiency now carry equal weight. Here Ironwood offers a compelling advantage. It pairs large on-package memory per chip with a 9,216-chip superpod, potentially surpassing NVIDIA in aggregate memory capacity and performance at the pod level.
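The inference metrics named above can be computed from basic serving measurements. The sketch below uses made-up sample latencies, an assumed hourly price, and an assumed token rate. None of these are Ironwood or NVIDIA benchmarks, and the function names are hypothetical.

```python
import math


def percentile(latencies_ms, pct):
    """Nearest-rank percentile (e.g. pct=99 for p99) of latency samples."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def throughput_qps(num_queries, wall_seconds):
    """Completed queries per second over a measurement window."""
    return num_queries / wall_seconds


def cost_per_million_tokens(price_per_hour, tokens_per_second):
    """Serving cost in dollars per million generated tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return price_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical measurements for illustration only.
    samples = [42, 38, 51, 47, 40, 120, 44, 39, 45, 43]
    print("p50 latency:", percentile(samples, 50), "ms")
    print("p99 latency:", percentile(samples, 99), "ms")
    print("throughput:", throughput_qps(1_200, 60), "queries/s")
    print(f"cost: ${cost_per_million_tokens(10.0, 5_000):.2f} per 1M tokens")
```

With these samples, a single 120 ms outlier leaves p50 at 43 ms but pushes p99 to 120 ms. This is why serving systems track tail latency alongside throughput and cost rather than relying on averages.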

Inference demands high efficiency and low latency, areas where Google’s architectural focus delivers promising results. Google rates Ironwood at 2x the performance per watt of its predecessor, Trillium, which makes it well suited to serving inference queries at hyperscale. Offering Ironwood exclusively through Google Cloud could further strengthen Google’s competitive position against NVIDIA.

The competition now centers on serving more queries at lower latency and lower power. As Google pushes along this new axis of competition, NVIDIA faces challenges but is not backing down. Its Rubin CPX GPU, designed for the compute-heavy context phase of long-context inference, is part of its effort to hold its ground. Even so, Google’s advances are a wake-up call for the industry and signal a potential shift in AI computing dynamics.